AI-Prompting for Large Language Models (LLMs)

I can help you with AI-Prompting. But why do you need help?

AI-Prompting is an approach to eliciting solutions or answers from so-called Large Language Model (LLM) AI systems. What these systems basically do is construct a chain of output tokens (words or, say, programming language statements) according to their pre-trained sequencing probabilities, in accordance with the input question. Thus, the results these systems produce are highly sensitive to the question or ‘prompt’ that triggers the generation of the output. This is easiest to see, I think, when you use different languages in the prompt: a question asked in German will result in an answer in German, and, as the sequencing rules for German are based on a different training corpus than those of, e.g., English, the contents of the output will differ from the output to a prompt in English as well.
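
To make the idea of ‘sequencing probabilities’ more tangible, here is a minimal toy sketch in Python. It is not how a real LLM is implemented (those use neural networks over long contexts and far larger corpora); the two tiny corpora, the bigram table and the function names `train_bigrams` and `generate` are all assumptions made up for illustration. It only shows that the continuation depends entirely on the prompt and on the corpus the probabilities were learned from.

```python
import random

# Toy "training corpora" (invented for this sketch). A real LLM learns from
# vastly more text; a bigram table is only an illustration of the principle.
CORPORA = {
    "en": "the cat sat on the mat . the dog sat on the rug .",
    "de": "die katze sitzt auf der matte . der hund sitzt auf dem teppich .",
}

def train_bigrams(text):
    """Count, for every token, which tokens followed it in the corpus."""
    tokens = text.split()
    table = {}
    for current, following in zip(tokens, tokens[1:]):
        followers = table.setdefault(current, {})
        followers[following] = followers.get(following, 0) + 1
    return table

def generate(table, prompt, max_tokens=8, seed=0):
    """Extend the prompt token by token, sampling each next token in
    proportion to how often it followed the previous token in training."""
    rng = random.Random(seed)
    output = prompt.split()
    for _ in range(max_tokens):
        followers = table.get(output[-1])
        if not followers:  # last token never seen in training: stop
            break
        choices = list(followers)
        weights = [followers[token] for token in choices]
        output.append(rng.choices(choices, weights=weights)[0])
    return " ".join(output)

# One model per language, each knowing only its own corpus.
models = {lang: train_bigrams(text) for lang, text in CORPORA.items()}
english = generate(models["en"], "the dog")
german = generate(models["de"], "der hund")
print(english)
print(german)
```

The German model can only ever continue with German tokens from its own corpus, and a prompt whose last word it has never seen yields no continuation at all. The toy model has no way to ask what was meant.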

Further, it cannot be stated often enough: these LLM systems are not Dialogue systems. They do not ‘understand’ tasks, and therefore they are not able to identify which information is lacking to solve the problem posed and request it from the user. So, if you want such a system to deliver a solution to a specific task, the instruction for problem solving needs to cover all relevant information in a way that permits the LLM system to do its best. Here it helps if the human side is capable of Dialogue, i.e. understanding the AI’s output, evaluating it with respect to the intended task, and, if necessary, reformulating the prompt to achieve a more appropriate result, perhaps also comparing the results for different language inputs.
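
Since the system will not ask for what is missing, one option is to check the prompt for completeness on the human side before sending it. The following is only a sketch under my own assumptions: the class `TaskPrompt`, its fields and the choice of which fields count as required are hypothetical and not part of any LLM product or API.

```python
from dataclasses import dataclass, field

@dataclass
class TaskPrompt:
    """Hypothetical container for everything the model needs up front."""
    task: str = ""
    context: str = ""
    output_format: str = ""
    constraints: list = field(default_factory=list)

    def missing_fields(self):
        """Return the names of required fields that are still empty.
        The LLM will not request them, so the human side has to check."""
        required = {
            "task": self.task,
            "context": self.context,
            "output_format": self.output_format,
        }
        return [name for name, value in required.items() if not value.strip()]

    def render(self):
        """Assemble one self-contained prompt, refusing incomplete input."""
        missing = self.missing_fields()
        if missing:
            raise ValueError("prompt is incomplete, missing: " + ", ".join(missing))
        lines = [
            "Task: " + self.task,
            "Context: " + self.context,
            "Expected output: " + self.output_format,
        ]
        # Constraints are optional; list them one per line if present.
        for constraint in self.constraints:
            lines.append("Constraint: " + constraint)
        return "\n".join(lines)

draft = TaskPrompt(task="Summarise the attached meeting notes")
print(draft.missing_fields())

complete = TaskPrompt(
    task="Summarise the attached meeting notes",
    context="Notes from a 30-minute project kick-off, pasted below.",
    output_format="Five bullet points in plain English",
    constraints=["Do not invent attendees or dates"],
)
print(complete.render())
```

If the result is not appropriate, the human reformulates the fields and renders again; the evaluation step itself stays with the person.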

Thirdly, as these LLM-AI systems are opened to the general public (e.g. via the chatbot-like interface ChatGPT to the GPT-3 family of LLMs) for (somewhat) supervised learning, their behaviour changes rather quickly over time. So, you need to keep track of the development of these systems.

And yes, when Google releases its ‘Taskbot’ based on ‘LaMDA’, things may take a whole new turn again!

Below, I give you examples from ChatGPT.

- I know what the problem is with LLM-AIs and what you need to get better results

- I have 30+ years of experience in AI, in particular in all aspects of Dialogue systems (cf. my publications and patents), as well as many contacts in the industry

- I am fluent in English, German and French, and have decent knowledge of some other languages (e.g. Dutch, Italian, Danish, …)

- I have a large body of common knowledge to judge the factual accuracy and credibility of LLM output

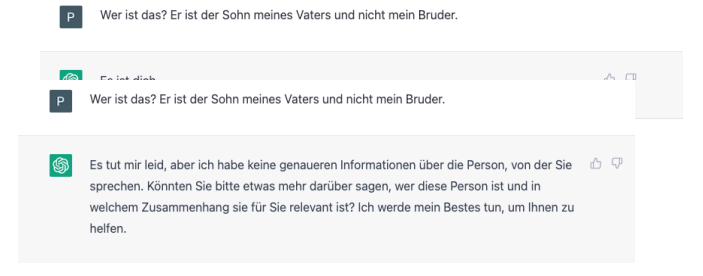

This is what happens if an AI has no ‘world knowledge’ at all of a particular area (early December 2022): Who is this? My father’s son but not my brother.

(Sorry, but I have no more specific information about the person you are talking about. Could you please say a bit more about who that person is and in which context that person is relevant to you?)

Over time, some world knowledge was added (end of December 2022):

By the end of January 2023, users’ feedback had taught the English version the right answer:

In the German version, the factual information had apparently been taken over, but the grammar had not followed suit!

What else can I help you with?

I'm happy to answer questions! Please contact me!